By a zero hour contract, I don't mean you turn up for work, do basically nothing and go home with £300, which disgraced Lord Hanningfield claims is quite common in the House of Lords. Zero hour contracts are ones where the employer has no obligation to give you work, i.e. you can turn up for work, be told there and then whether you're needed and be sent home without pay for the day.

Some groups, such as the Chartered Institute of Personnel and Development, have claimed that zero hour contract workers are happy, whilst others, such as the TUC, demand tougher regulation of these contracts. To quote the TUC:

"The growth of zero hours contracts is one of the reasons why so many hard-working people are fearful for their jobs and struggling to make ends meet, in spite of the recovery"

Into this mix, Vince Cable acknowledged the clear signs of abuse but decided not to ban them on the grounds that they offer 'welcome flexibility'.

So are they good or bad? Well, in my view they're a form of exploitation which should not be welcomed, but that's not the point of this post. Whenever you hear someone talking positively about zero hour contracts, or providing surveys and market research proclaiming their benefits, the one question you should ask is:

"If zero hour contracts are so good, I assume you're on one?"

If they're not, then show them the hand and walk away.

I would, of course, welcome the use of zero hour contracts in the House of Lords. We could take a quick online vote as to whether we needed them today or not. Clearly, if Hanningfield's claims turn out to be true then many are just turning up to claim expenses.

I'll finally note that Hanningfield's defence of his actions was "I have to live, don't I?" Well, there's always income support and housing benefit, which is what the rest of the country uses. If you need the money, Hanningfield, then get on your bike and get a job. From the sounds of it there are a lot of highly flexible and 'happy worker' zero hour contracts out there.

A node between the physical and digital.

The rants and raves of Simon Wardley.

Industry and technology mapper, business strategist, destroyer of undeserved value.

"I like ducks, they're fowl but not through choice"

Thursday, December 19, 2013

Cloud Standards and Governments

As any activity evolves from product (+ rental) to more of a commodity (+ utility services) due to the forces of competition, a number of factors come into play. There is a period of re-organisation as a new market emerges, a co-evolution of practice due to the changing characteristics of the evolving activity, and inertia caused by past practices and past means of operation. We see all of this in abundance in the cloud market today.

Under normal circumstances a set of defacto standards would appear in the market, followed by a period of consolidation. Ideally one common standard emerges, but in some cases we get a limited set of alternatives. As the market continues to develop, multiple implementations of the defacto standards tend to form and a competitive market is created.

It doesn't always happen like this; sometimes competition is restricted by the development of a monopoly / oligopoly. In such circumstances, when user needs are not met or competition is restricted in a mature market, it may become necessary for Governments to force a set of standards onto that market in order to create a level playing field, e.g. document formats.

There are also variations in the type of market that is formed. For a free competitive market (as opposed to a constrained or captured market), the means of implementing the standards must be open to all. This is why the ability to reverse engineer an implementation is often critical to forming a competitive market. In the ideal form, the standard would be implemented in an open source reference model.

IaaS, however, is not a mature market but an emerging one, for which a defacto standard is already forming in the AWS APIs. The rapid growth of AWS is testament to how well it meets user needs, but nevertheless, for reasons of buyer / supplier relationship and second sourcing options, a competitive market of many providers is desirable. Fortunately, for the core parts of the AWS APIs (there is a long tail of functionality) there are already multiple open source implementations, from Eucalyptus to CloudStack to Open Nebula and even parts of OpenStack. APIs themselves are also treated as principles (i.e. not protected by copyright) in US / EU law, despite the best efforts of Oracle. Hence we have the basic pieces necessary to form a competitive market of AWS clones and possibly also GCE clones.

For an emerging market this is a reasonable position to be in at this stage. If anything, Governments should push forward with consolidating the market by reaffirming APIs as principles and even limiting any disguised software patents that may restrict re-use. This would all be positive.

However, vendors have inertia to change, particularly when that change threatens their business. So today I increasingly hear calls from vendors for 'Open Standards' in IaaS on the grounds of meeting user needs for interoperability. However, what I normally hear from users is that they'd like a number of alternative providers of the AWS APIs, i.e. homogeneity in the interface but heterogeneous providers. There is a conflict here because the 'Open Standards' proposed are not the AWS APIs, whilst users rarely say they need any set of APIs other than AWS. However, this difference in needs isn't going to stop vendors trying to pull a fast one by attempting to ban competitors' interfaces through Government imposed 'Open Standards'.

So when your local vendor comes and starts talking to you about the importance of 'Open Standards' ask yourself some basic questions ...

1) Is this an emerging market?

2) Is there a defacto standard developing in the market?

3) Is there one or more open source reference implementations of the defacto?

If the answer to all three is yes (as is the case with IaaS) then there is no need to force a standard onto the market and everything seems to be healthy. A good response to the vendor would be to tell them to come back with a service offering that provides the defacto.

If the vendor insists that you need their 'open standard', then ask yourself ...

1) Is the vendor 'open standard' different from the defacto?

2) Do the vendor's products / services offer the 'open standard'?

3) Is the vendor trying to create an advantage for themselves regardless of actual user needs because they cannot compete effectively in the market without this?

In all likelihood, the call for 'open standards' in an emerging market is just an old vendor play of 'if you can't beat them, try and ban them'.

Caveat Emptor.

Tuesday, December 17, 2013

On Politics, IT and Strawmen

Shadow Minister Chinyelu Susan 'Chi' Onwurah describes how project management in Gov IT has been politicised. The article describes a coalition portrayal of 'Waterfall as monolithic Labour' and 'Agile as dynamic, entrepreneurial Tory', and argues that to 'think that the solution to effective ICT deployment is to simply change your project management methodology is arrogant and naive'. It then goes on to describe how Labour will undertake this 'transformation with more humility', requiring 'diverse methodologies' and learning from 'the process itself'.

This all sounds very reasonable but there is a problem with this portrayal. The UK GOV IT approach is already on the path to becoming more balanced.

With any course correction, an oversteer is a natural part of shifting an organisation away from one all-encompassing method to a more balanced approach. I already see signs of the shift to a balanced approach happening, which is remarkable in such a short period of time. For example, whilst HS2 might be seen as controversial in some circles, it is showing signs of strategic understanding and IT leadership which I've rarely seen rivalled in the private sector. Rather than being a 'one size fits all' shop with little or poor understanding of why action is taken, its CIO has been using a mapping technique to break down large scale projects and determine which methods are appropriate. Its future appears to be neither an Agile / XP / Scrum development shop nor a Six Sigma / Prince / Waterfall shop, but something more balanced: the use of the right methods where appropriate.

Unfortunately this doesn't help the argument that Labour will make a difference, because it's difficult to claim a difference (a more balanced view) when this is already happening. The portrayal proposed in the article is, unfortunately, a necessary instrument of what we know as a strawman argument. If you were going to describe two 'extremes' of past and present, then in my opinion they would be:

Under previous administrations, UK Gov IT could be characterised by an over-reliance on large outsourcing arrangements, inefficiency in contracts, poor data, poor focus on user needs, poor development of internal engineering skills, extensive use of single and highly structured methods of project management, and lots of discussion on open source / transparency / open standards but little execution.

Under the current administration, UK Gov IT can be characterised by a movement towards both insourcing where appropriate and use of SMEs, a greater challenge in contracts, a focus on user needs and collection of transaction data, co-operation with other governments, a focus on recruitment of engineering talent, use of more appropriate management methods from scheduling systems such as Kanban to a plurality of development techniques, a focus and action on open source, open data, transparency and open standards.

The change between these two states is not due to some political ideology but to a desire of many people (both internal and external) to make things better and to reduce some of the excesses. Onwurah claims pointedly that Mark Thompson, who responded to the Shadow Minister's speech, also wrote the Conservative Technology Manifesto - something which I'm not aware of and am a bit surprised by. I happened to work with Mark on a 'Better for Less' paper which had some input into the changes, and that paper was driven solely by a focus on this principle of making things better.

For the record, until the worst excesses of spin of the Blair years, I had always voted Labour. Due to my disillusionment with Labour, I have subsequently voted Liberal Democrats. I have never once in my life voted Conservative. I don't personally agree with many of their policies. But then I wasn't asked to be involved in writing the paper because of my political beliefs but because the group felt I had something to contribute. Politics never came into it.

But neither manifestos nor politics really made this change possible. As Mark pointed out, 'the important point is that one would be hard pressed to find a single outcome in Labour’s Digital Britain to which those in the Government Digital Service (GDS), or their digital leaders network, are not already deeply committed'. The real change, something familiar to all of us who have worked in the open source world, was strong leadership, and UK Gov IT appears to have had this in Francis Maude.

I do fear that the worst excesses of sharp suited management consultants are itching to come back with the panacea of “big IT” to achieve joined-up government. In my view, we would be wise to keep off that path and continue to create the more balanced future that seems to have already started.

I have had concerns in the recent past that Labour has yet to show the example of strong IT leadership that will be needed going forward. If it were up to me, I'd keep Francis Maude moving the changes forward regardless of political persuasion. Maybe Shadow Minister Chi Onwurah can provide this future leadership - I simply do not know. What I do know, from a competition viewpoint, is that IT is more important to our future industry than petty debates, and if this is what the future leadership might mean - politicising the debate and creating strawman arguments - then my concern grows.

I hope I'm misunderstanding what is happening here but the politicisation of project management methods helps no-one.

Friday, December 06, 2013

Once more unto the breach, dear friends, once more ...

If you've been paying attention then you'll have noticed that the UK Government has had a fairly torrid time of trying to implement a policy for open standards.

It eventually won that fight despite efforts from numerous groups such as the BSA, large US companies apparently attempting to influence the process, questions raised over the role of one of the chairmen and software patent heavyweights piling into public meetings. After several rounds of shenanigans and lobbying, the UK Gov was finally able to announce a comprehensive policy towards open standards. It was finally able to do what the only respected peer reviewed academic paper on the subject said was a good thing to do - adopt the policy.

However, this fight was just to get the policy approved. We should of course expect many more fights when it comes to specific open standards. Why? Well, it all has to do with money, or more importantly the ability of certain companies to help themselves to large amounts of your and my money.

To explain why, let us first understand why we need standards. One of the fundamental purposes of standards is to provide you with choice. If two systems use the same standards then you can switch between them, and this switching encourages competition. The reason why UK Gov has a preference for open standards is that it also has a preference for fair and competitive marketplaces, i.e. if the standard is owned by someone or encumbered with patents then that person can limit competition in the market.

Shouldn't we just therefore make everything into a standard? No. There is a cost with standardisation in that it limits innovation at the interface of the standard, hence the implementation of standards is only suitable for activities that have become commonplace and mature.

But surely the marketplace as it matures will define its own standards? Yes. These are called the defacto standards and in most cases they should be adopted. However, in a mature market where the defacto doesn't meet some user need or encourage competition then Governments might need to introduce standardisation to create a competitive market.

Can you give me examples? Certainly. Two examples spring to mind.

1) In the case of cloud computing you have an evolving market where defacto standards are developing. As an evolving market it is too soon for any Government to attempt to implement standards.

2) In the case of documents and word processing systems (like Open Office, Libre Office and Microsoft Word) you have a highly mature and established market. However, in this case, despite there being a defacto, there is no easy switching between a competitive market of products, and this is most clearly shown when people talk of the cost of transfer from the defacto to another.

So why should I care as a vendor? If you are the defacto provider for a mature marketplace and there is a significant cost for people to transfer off your system then you've in effect created a captured market and this can be very lucrative. You won't experience the normal competitive pressures of a free market, you can charge high prices knowing that most won't wish to incur a cost of transfer. This actually creates an incentive for you to lock people further into your system and you'll try and stop anything which threatens this such as open standards.

So why should I care as a customer? Well, if it's a mature market with a defacto and there are limited alternatives unless you incur a high cost of transfer then you're likely to be paying well over the odds. What's operating is not a free market but a captured one and your exit cost from the existing defacto is simply a long term liability which will keep on increasing. In such cases, you should be in favour of open standards because that's the only way you'll get a fair and competitive (known as 'free') market and limit future liabilities.

So that gives some basic background on open standards. The real question is why I bother mentioning this now. Well, the UK Government has just launched a process to select open standards for government documents. I fully expect the lobbying machine to be out in full on this one, as what's at stake is oodles of cash and control of a market.

Microsoft Office is obviously the defacto in a well established and mature market which has little differential between product offerings but also high costs of transition. The market has all the appearance of a captured market with weak competition.

However, doesn't Microsoft Office 2010 use .docx, which is Microsoft Office Open XML Format and therefore an open standard? Alas no.

Microsoft Office 2010 provides a number of formats (docx, pptx, xlsx) that collectively are called Office Open XML format (OOXML or OpenXML). This file format was submitted to ISO after intensive lobbying, including accusations of rigging, and is known as the OpenXML standard (ISO/IEC 29500, adopted in 2008).

Alas, this standard was broken into two parts. There is the standard itself, known as strict OpenXML, which was accepted by ISO (the International Organization for Standardization), and then there is transitional OpenXML, which was supposed to be a transitional file format to help Microsoft ease the removal of some of the past closed source legacy from its file format. Of course, Microsoft Office 2010 implemented transitional OpenXML but provided only reading of strict OpenXML.

So when you use Microsoft Office 2010 to send a document in an OpenXML format such as .docx to someone who uses Libre Office which reads and writes strict OpenXML (as defined by ISO) and they edit the document and send you back a .docx file, then what you see is often a corrupted file or something which can only be read and has to be converted.

You probably think that something is wrong with their software. Well, the problem isn't their word processor; it's that Microsoft Office 2010 didn't implement strict OpenXML except for reading. Office certainly claims that .docx is OpenXML, and that OpenXML is an open standard approved by ISO, but the version of OpenXML that ISO approved (strict) is not the version that Microsoft Office 2010 .docx uses (transitional). This means every other system has to reverse engineer the transitional OpenXML format that the default .docx uses, and that's never perfect.

So let me be crystal clear: Microsoft Office 2010 didn't even fully implement the open standard that it created and that was approved by ISO after its own intensive lobbying. It did promise to provide reading / writing of this format in the future.

Hang on, that's old news ... doesn't Microsoft Office 2013 now implement reading / writing of strict OpenXML? Well, the latest release of Microsoft Office does now implement strict OpenXML. Unfortunately, the default .docx is still transitional OpenXML, you have to specifically select strict OpenXML when you save the file (an option buried in the settings) and, of course, in order to use Microsoft Office 2013 you need to be running Windows 7 or Windows 8.

So basically, yes you can have the open ISO standard strict OpenXML if you upgrade your operating system, buy the latest version of Microsoft Office 2013 and remember to save in the right format. It must be noted that whilst Microsoft Office 2013 does now provide support for strict OpenXML and even includes group policies for this, the default is .docx which is using Microsoft's own version of an open standard (transitional OpenXML) but without actually being that open standard (strict OpenXML). It's all a bit messy and confusing.

So what does this mean in practice? Well, let us assume that the UK Gov chooses the ISO approved strict OpenXML as an open document standard. First, Gov departments would need to upgrade to Microsoft Office 2013, which means upgrading to Windows 7 or 8 with all the changes needed to applications (NB. Windows XP is out of support in April 2014). They'd also have to set group policies so that the default was strict OpenXML.

However, let us suppose you create a document, a .docx file, and send it to someone else who happens to be using Microsoft Office 2010. Unfortunately, though they could read the .docx file (Microsoft Office 2010 provides read support for strict OpenXML), they couldn't write / edit such a document without changing it to another type of .docx (the default transitional OpenXML). Hence you might send out strict OpenXML but easily get back transitional OpenXML, even though both files are called .docx.

Now, given that some companies / organisations are still in the midst of rolling out Microsoft Office 2010, you're going to have all sorts of problems trying to introduce strict OpenXML as the .docx format, and in practice the default transitional OpenXML format will rule for many, many years to come. At best, you're going to end up with a messy estate of .docx files, some of which are strict format and some of which are transitional format, but all of which are called .docx.
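To make the 'all called .docx' point concrete, here is a minimal Python sketch that sorts a .docx into strict or transitional by looking at the namespace of the root element of its main document part. It assumes the main part lives at word/document.xml (the usual location, though strictly the package relationships decide this) and that transitional files use the original schemas.openxmlformats.org WordprocessingML namespace while strict files use the purl.oclc.org one. The helper names docx_flavour and make_minimal_docx are hypothetical, for illustration only.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Assumed namespaces of the root <document> element in the main WordprocessingML part:
# transitional keeps the original ECMA namespace, ISO strict uses purl.oclc.org.
TRANSITIONAL_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"
STRICT_NS = "http://purl.oclc.org/ooxml/wordprocessingml/main"


def docx_flavour(data: bytes) -> str:
    """Hypothetical helper: classify the bytes of a .docx as strict, transitional or unknown."""
    with zipfile.ZipFile(io.BytesIO(data)) as package:
        # Assumption: the main part is at word/document.xml (the common default).
        root = ET.fromstring(package.read("word/document.xml"))
    # ElementTree reports qualified names as "{namespace}localname".
    if root.tag == "{%s}document" % STRICT_NS:
        return "strict"
    if root.tag == "{%s}document" % TRANSITIONAL_NS:
        return "transitional"
    return "unknown"


def make_minimal_docx(namespace: str) -> bytes:
    """Build a toy package holding only word/document.xml (not a file Word would open)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as package:
        package.writestr(
            "word/document.xml",
            '<w:document xmlns:w="%s"><w:body/></w:document>' % namespace,
        )
    return buffer.getvalue()


if __name__ == "__main__":
    print(docx_flavour(make_minimal_docx(STRICT_NS)))        # strict
    print(docx_flavour(make_minimal_docx(TRANSITIONAL_NS)))  # transitional
```

Run over a shared drive, a check along these lines would show the mixed estate described above. It only inspects the root namespace, so it says nothing about which other features a given file relies on.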

Fortunately, if you're using Microsoft Office 2013 this is ok, and the messier the environment, the more incentive there is for everyone to upgrade (including the operating system). Alas, there are only a limited number of alternative companies providing word processing capable of reading / writing Microsoft Office .docx (transitional format) as it currently stands, because of the effort needed to reverse engineer transitional OpenXML (remember, strict is the standard).

For example Libre Office does provide the ability to save files as two different .docx formats - one for "Microsoft Word 2007/2010 XML (.docx)" which is the reverse engineered transitional OpenXML format and one for "Office Open XML (.docx)" which is the strict OpenXML format that is the ISO standard.

The upshot of this is that, for many, it'll just seem easier to stick with Microsoft, upgrade the OS and Office package, accept a messy estate and in all probability stick with transitional OpenXML as the .docx format. So much for a competitive market and open standards then.

However, there is an alternative - ODF.

So what is ODF? It stands for Open Document Format; it covers a number of office formats (.odt, .ods, .odp) and is ISO/IEC standard 26300, adopted in 2006. It is supported by multiple technology providers including AbiWord, Adobe Buzzword, Atlantis Word Processor, Calligra Suite, Corel WordPerfect Office X6, Evince, Google Drive, Gnumeric, IBM Lotus Symphony, Inkscape exports, KOffice, LibreOffice, Microsoft Office 2010 onwards, NeoOffice, Okular, OpenOffice, SoftMaker Office and Zoho Office Suite.
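ODF files are also ZIP packages, but they announce their type differently: the package is expected to begin with an uncompressed entry called mimetype holding a media type such as application/vnd.oasis.opendocument.text. A minimal Python sketch that reads it, assuming a well-formed package (the helper names odf_kind and make_minimal_odf, and the mapping of media types to labels, are hypothetical and for illustration only):

```python
import io
import zipfile
from typing import Optional

# Media types for the three ODF office formats (.odt, .ods, .odp).
ODF_KINDS = {
    "application/vnd.oasis.opendocument.text": "text (.odt)",
    "application/vnd.oasis.opendocument.spreadsheet": "spreadsheet (.ods)",
    "application/vnd.oasis.opendocument.presentation": "presentation (.odp)",
}


def odf_kind(data: bytes) -> Optional[str]:
    """Hypothetical helper: return the ODF document kind, or None if not recognised."""
    with zipfile.ZipFile(io.BytesIO(data)) as package:
        entries = package.infolist()
        # Assumption: a conforming package starts with the "mimetype" entry.
        if not entries or entries[0].filename != "mimetype":
            return None
        media_type = package.read(entries[0]).decode("ascii").strip()
    return ODF_KINDS.get(media_type)


def make_minimal_odf(media_type: str) -> bytes:
    """Build a toy package holding only the mimetype entry (not a complete document)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_STORED) as package:
        package.writestr("mimetype", media_type)
    return buffer.getvalue()


if __name__ == "__main__":
    print(odf_kind(make_minimal_odf("application/vnd.oasis.opendocument.text")))
```

Unlike the two flavours of .docx, one ODF media type means one format, which is rather the point of having a single open standard.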

Microsoft also provides support for ODF; however, as it says, "Formatting might be lost when users save and open .odt files". Brilliant.

One of the big advantages of word processing / spreadsheet and presentation systems like Libre Office is that they have close to feature parity with Microsoft Office but aren't tied to a specific operating system, i.e. you can get Libre Office for Ubuntu, Mac OS X and Windows.

So why doesn't Microsoft just adopt ODF? In a nutshell, control of a market. Why simply give up a captured market if you don't have to, especially if you can persuade people that the exit costs your product has created aren't actually an ongoing liability? If you can use those exit costs to persuade people against moving, explain that your product really is 'open' with OpenXML and add in a complex mess of strict and transitional formats, then you can hopefully get people to stay put and just upgrade both Office and the operating system. You get to keep the captured market intact!

Oh, and by the way, those exit costs are significant. Microsoft's own people estimated that adoption by UK Government of ODF as the standard would cost in excess of £500 million. What that means is we're currently locked into an environment which it will cost £500 million to escape from (assuming the figure is correct) but what they fail to mention is that 'liability' is unlikely to decrease over time. It's that 'liability' which keeps us paying for new versions of Office, new versions of OS, application upgrades and also prevents a truly competitive market for what is fundamentally a common and well defined activity - writing documents etc.

This is where Government really has to step in because it has power and influence in the market.

This is clearly a mature but captured market, in which the introduction of an open standard will encourage greater competition, reduce long term liabilities such as exit costs and therefore benefit users. There is no reason why Microsoft can't upgrade previous versions of Office to write strict OpenXML (they've had six years) and change the default .docx to this; however, it's probably just not in their interests to do so. The pragmatic choice for Government would therefore seem to be ODF.

If you select ODF then there is also no reason why Microsoft cannot sell office products based upon ODF (which it supports), and if not Microsoft then there are lots of other potential vendors (see list above), including open source solutions, who will. Since Portugal and other Governments have already gone down the route of establishing ODF as the standard, it also doesn't make much sense for the UK Gov to create its own island of technology standards.

But then again, standards are rarely about pragmatism and more about vested interests. This is why there will be a fight: you're talking of an established defacto with a captured market potentially being forced to give up that control and compete in a free market. I expect to see lots of intense lobbying over why OpenXML should be the standard, despite the default .docx of Microsoft Office 2010 and 2013 being transitional OpenXML and not the approved ISO open standard, strict OpenXML.

At the end of the day this is about word processing, spreadsheets and presentations. We don't need umpteen file formats for this and certainly not two different formats for .docx such as strict and transitional. This will be claimed to be a necessity for reasons of transition but we've had six years and no end in sight to this mess. In reality this is all about prolonging control of a captured market and almost nothing about end user needs.

This is why Governments must act but of course, it'll need you to help counter the well funded lobbyists that are likely to appear - just like last time.

Before I go, I thought I'd better clear one last thing up. I happen to love Microsoft Office. I use it all the time on Mac OS X, in particular Excel. However, I'd rather see Microsoft compete on providing me with a better experience / product based on ODF (an open standard) in a competitive market than see what is happening today. I do understand the temptation to claim something is an open standard when it is not, and I do understand the gameplay and how standards can be used to control the market.

I hope, maybe with a change of leadership, that Microsoft learns it can compete purely on having better products and not by attempting to create and exploit lock-in for what is a common activity. I fear that this standards debate will just become another example of Microsoft rallying troops into the fray with the usual cries of being "open", concerns over exit costs that ignore long term liabilities and funded "white papers" masquerading as academic work etc.

Hence in the next post, I'll talk about some of the common tactics we're likely to see.

Wednesday, November 27, 2013

A spoiler for the future - Bitcoin

Bitcoin is a marvel of our time, a weapon which exploits social engineering to astonishing effect. The delivery mechanism is greed, the payload is a laissez faire economic system for the unwary. I thought rather than go through the details of how it operates or where it impacts (much of which I've mapped and is related to commoditisation of the means of transfer) that I'd just get right to the conclusion of what I think is going to happen.

So be warned: as with the 3D printing spoiler, this is a spoiler for the future, which I unfortunately expect to play out over the next thirty years (barring some strong intervention, e.g. by Government) ...

1. Bitcoin will continue to grow and whilst there will be some wobbles, as it passes $1,000 per bitcoin a slew of articles will be published on whether this is "the future of currency" and on its potential for disrupting existing financial markets, including currency arbitrage. Early financial instruments around bitcoin will start to develop. China will invest in developing exchanges.

2. As bitcoin races towards $10,000 per coin (expect lots of wobbles along the way) many more Governments will start to introduce legislation formally recognising the currency. A number of articles will be written in equal measure on how bitcoin could threaten taxation systems and how bitcoin represents the rise of laissez-faire capitalism. Numerous books will be written on the "democratisation of currency", "the new laissez-faire", "a new form of capitalism" etc. There will be a very faint weakening in treasury bonds and the ability of Governments to raise funding. New practices will start to emerge, ranging from wealth hiding and wealth pooling to new fund raising and equalisation of global investment opportunities. Many organisations in the financial and related industries will still dismiss the impact of bitcoin as fairly irrelevant. China will invest in the development of the industry, encourage external use of bitcoins and introduce legislation regarding internal use of bitcoins (i.e. use outside its borders is ok but use as a currency internally is severely limited). This will include a register of Chinese users with state identifiable UUIDs (bitcoin addresses) and control of the exchange rate of bitcoin to the Renminbi (a non free floating currency) with severe sanctions for non state approved exchanges.

3. The volume of worldwide transactions will continue to rise exponentially with bitcoin hitting $50,000 per coin. Governments will become slightly concerned about black / grey markets and a perceived potential reduction of income tax receipts as we head towards a cash based system where the cash is untraceable. Whilst bitcoin transactions are public, the use of single addresses per transaction, mixing services, volume and other techniques will mean the effort required to identify connections between addresses, and hence trace identity, becomes exponentially harder. Concerns over tax receipts, Government debt, a slight weakening of gilts and increased difficulty in raising funds will add pressure for more austerity. There will be a number of high profile investigations of bitcoin exchanges and articles will appear with provocative headlines such as "benefit scrounger is a millionaire bitcoin hoarder". Some attempts will be made to control circulation of bitcoins, which will be countered by well-funded lobbyist groups including large banks that have adapted to this new model. A journalist will also write an exposé on "How many bitcoins does your MP have?". The currency itself will start to appear in wider circulation with articles on "How to buy your car with bitcoins". New roles and services will start to emerge e.g. a wealth detective, used to hunt down sources of wealth. The battle to hide and find wealth will heat up and new start-up investment funds with new banking platforms will emerge. China will grow as a centre for development of bitcoin and related instruments. A significant fraction of Chinese external investment will be encouraged and conducted in bitcoins and there will be several high profile cases of severe sanctions for unofficial use of exchanges. In China, through a combination of the great firewall, official UUIDs, sanctions and official exchanges, it will become difficult to use bitcoins internally to trade through non official routes.

4. As bitcoin reaches $250,000 per coin, other 'old world' currencies (with the exception of the Renminbi) will show the first signs of weakening. Many articles will be written, ranging from "How I lost a bitcoin fortune" to "The bitcoin billionaires". Financial instruments (currency swaps, derivatives etc) will be well established with bitcoins and along with a growing OTC market there will be a growing market of publicly traded instruments. Most pension funds will be investing in bitcoins and the currency, driven by greed, will now be so pervasive in our systems that it becomes too big to fail and impossible to stop. Most governments will have realised they face a future threat of reduced taxation as the public black market slowly starts to become unmanageable and the laissez faire economy looms. Articles will be written on "You can't arrest everyone?" exploring how widespread untaxed transactions have become. The effort required to trace identity through public transactions will now have become extraordinary due to highly automated privacy features taking advantage of mixing services. To add to this, along with mounting debt there will be significant weakening in the gilts market as the market starts to price in the future effects of bitcoins. New solutions for taxation will appear with books published on topics from "No representation without taxation - managing a voluntary taxation system" to "Reducing the State" to "A Return to Land Tax?". Numerous currency based industries (e.g. exchanges, investment funds) that have not adapted to this world will be facing the early signs of oncoming disruption. Whilst institutions will express 'shock' at the impact of bitcoin and some will exhibit signs of denial, there will be a rapid movement of organisations into the space. Some journalist will write an article "Is the end of the Dollar / Euro in sight?" whilst another will write "Is this the end for Government as we know it?"

5. As bitcoin surpasses $1M per coin (NB not in today's money but allowing for decline in $ value), most currencies will show weakening. Many governments will start to introduce alternative taxation mechanisms as the ability to determine income and / or wealth rapidly diminishes. Despite various moral authorities preaching on the subject, the use of bitcoins in an effective black market for everyday goods will become a social norm. Traders who operate with old style cash, declaring earnings and paying taxation will find themselves at a commercial disadvantage. Economists will promote pragmatism and acceptance of the laissez faire economy. China will continue to heavily promote and invest in bitcoins, with most external trade conducted in them. Internal to the economy, the Renminbi will dominate and will have gained considerable value over other currencies. However, for other Governments conflict will result, particularly with the welfare state, since it will become almost impossible to determine individuals' actual wealth. Concepts such as progressive taxation and means tested welfare will be unenforceable in practice. Alternative mechanisms will be proposed such as "everyone gets a basic salary" from Government; however, with tax receipts in decline, a reduced ability to raise funds and no way to measure an individual's wealth, this salary will be low. Endless articles in the unforgiving press will talk of "welfare state funding for bitcoin millionaires". In desperation, taxation will turn towards mechanisms such as Land Tax, forcing the poorer to sell property. However, the shortfall will be such that new measures of austerity and reduction of the state are needed and further taxation systems such as "Citizenship tax" come under consideration. With the dismantling of the state system and the mechanisms of redistribution, extreme centralisation of wealth will occur as ROCE (return on capital employed) increases with C.

6. As bitcoins close on $5M per coin, most currencies will be in severe decline. Government finances will be in significant free fall. Austerity measures will have taken the route of unprecedented and radical decimation of the state - everything from state provided healthcare to coastguards to income support to education will be practically gone, replaced with numerous forms of bitcoin based insurance. If you can't afford it then you won't be able to gain access to it. There will be no state help as the state can neither fund universal care nor determine whether you deserve support. Severe forms of ghettoisation will appear. The argument will be made that as we live and compete in a global economy we have little choice. Articles will be written on "How Laissez faire will grow new industry", "The great economic experiment" and "The growth of user choice" but in reality the American dream will have become a nightmare for most. Social mobility will be at an all-time low, the cross generational poverty trap will become an accepted norm and a harder and less forgiving society will emerge with little or no welfare state. Taxation will be reduced to "property" and "citizenship" i.e. no representation without taxation (NB: as soon as you introduce this, including the extreme of $1 tax for 1 vote, then a few court cases later companies will have more voting rights than most ordinary people). Social cohesion will be weak, with often draconian imposition by the "funded" state on individuals seen as non citizens. The social contract will have been re-written, the Rubicon crossed and many Wat Tylers will have emerged. A new global super class of the exceptionally wealthy will emerge. Governments will be powerless to act as bitcoins will be embedded in every aspect of trade.
With severe reduction of the state, technological innovation in those economies will implode over the next economic cycle (k-wave), exacerbating an already vicious cycle. The key exception to this will be China with its tight control of the Renminbi and restriction of bitcoins to external trade. By this point China will be the capital and innovation superpower with extensive and virtually untraceable investments in all countries, a mixed economy model and high levels of social mobility, social cohesion and stability.

7. Some monetarist economist somewhere will go back and read Adam Smith's work on the importance of taxation, redistribution and government. Some member of the Chicago "nut house" of economic blah blah will still claim it's a success and the solution is more "laissez faire".

Of course, the future is uncertain. Let's hope that none of this comes to pass.

As you can guess, I'm not a fan of bitcoin. If left unchecked then I find it has the potential to undermine the importance of Government which is actually not good for competition and not good for the market. I hope none of the above happens and would rather see bitcoin disappear in a puff of history. However, don't confuse my disdain for bitcoin with opposition to the technology behind it. I view the blockchain as having huge and positive potential in many industries. I'm a fan of the blockchain, I just can't stand bitcoin.

Tuesday, November 26, 2013

The oncoming bloodbath for private cloud

Back in 2008, I talked about the development of the "private" cloud market as a transitional phase between the market of the time and a future hybrid market of multiple public providers. Of course, back then I took the view that the hardware vendors in the space would create a price war to force natural fragmentation of the market i.e. commoditisation does not mean centralisation.

Unfortunately, due to the exceptionally poor gameplay of many vendors in this space, commoditisation in this instance does seem to mean centralisation, to Amazon, Google and MSFT.

The key date for me for the decline of the "private" market was 2016. A number of factors from inertia to energy provision to establishing alternative options to cycle time for investment pointed to that time. Given the amount of effort put into OpenStack, the number of vendors involved, the marketing, the investment and the hopes pinned to it ... I tend to now think of 2016 as a bit of a bloodbath for private cloud.

Do I think it will be just those three vendors? Nope. I expect there to be clone environments (e.g. AWS public clones). There's a lot of mileage to be made here and a very specific user need for alternative suppliers which operate in the same way.

Do I think it's all over for private cloud? Nope. I still hold to my view of 2008. Private cloud will head towards niches but admittedly highly profitable niches. The most successful of those niche players will be those that co-opt the public standard i.e. AWS. Hence, I'm quite upbeat about groups such as Eucalyptus, CloudStack and those elements of OpenStack who have a focus on being an AWS clone.

Do I think it's all over for VMware and OpenStack? Pretty much. I take the view that VMware will experience a slow and painful downward slope and whilst OpenStack will exist after 2016 it won't do so in its current guise. In my opinion, many of the high profile companies involved will be facing a grim future. There will be some high value buy-outs and claims of success but it's all small fry compared with the future market and what should have been OpenStack's.

Do I think it's all over for the idea of an open source reference model for cloud? Nope. I talked about the importance of open source in forming competitive markets at OSCON 2007 and my view remains unchanged. I view the work of CloudFoundry as developing such a competitive market in the platform space. However, whilst the IaaS industry may be lost due to sucky gameplay, this is but a temporary state. You should never confuse commoditisation with centralisation because the means of production can yoyo between centralised and de-centralised.

It is perfectly possible that in a decade or so, as the industry becomes more settled and we've come to accept the standards that have been created for us, we will see a movement towards more decentralised IaaS provision through a competitive market. This is where Apache CloudStack (possibly Eucalyptus) will succeed if momentum can be maintained. It's the usual long slow haul of open source (think 10-15 years) and one which could have been avoided but in this case wasn't.

So who will succeed? Well, beyond Amazon, Google and MSFT then I see positive futures for AWS clones and for niche market provision of private clouds (especially Eucalyptus, CloudStack and parts of OpenStack). In the longer term, I still favour a competitive market of IaaS providers around an open source reference model but that'll be a good decade or more away.

--- Note, 8th October 2015

Eucalyptus and CloudScaling (focused on being AWS clones) got acquired. We've still to see any large scale public AWS clone launch (it's become way too late now to mount any serious effort). I'm disappointed here as I was expecting at least someone to try this obvious game. There are some niches for OpenStack in the network equipment vendor space (due to NFV). CloudFoundry continues to develop well but the past giants are still being half-hearted about this. People keep on telling me that the future for private cloud is bright ... I don't think so, I think some are living in la la land.

Accounting for change

This is an old post, repeated once again because a recent event has left me flabbergasted by some of the practice that goes on in IT.

Tl;dr : You always add exit costs to the originating system.

When comparing two systems you have numerous costs including licensing, maintenance, installation, support and exit that need to be considered. In one recent example, the question of whether to upgrade a system or switch to a more open alternative, a blatant misappropriation of costs occurred. The figures are provided in table 1.

Table 1 - Costs of System

| Name | License / Maintenance / Support | Length of Term | Installation Costs | Cost (solution) |
|---|---|---|---|---|
| System A2 | £3 million p.a. | 5 years | £1 million | £16 million |
| System B | £100,000 p.a. | 5 years | £20 million | £20.5 million |

In the above, upgrading system A1 to system A2 (which were the same system, just the latest version) incurred a high annual license / maintenance fee but small installation costs due to similarity of the system. However, migrating to an open source system (in this case system B) whilst reducing annual fees incurred a massive installation cost due to the cost of changing systems which worked with the existing installations. Hence at first glance, the sensible thing to do is simply upgrade to System A2.
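The Cost (solution) column in Table 1 is just the annual fees over the term plus installation. A minimal Python sketch of that naive calculation (the function name is hypothetical, for illustration only) reproduces the table's totals. Note that it has no notion of exit costs at all, so the conversion work caused by leaving System A1 simply lands in System B's installation figure:

```python
def naive_cost_of_solution(annual_fee: int, term_years: int, installation: int) -> int:
    """Cost (solution) as computed in Table 1: fees over the term plus installation.

    Any conversion work caused by leaving the old system is folded into
    `installation`, exactly as Table 1 does.
    """
    return annual_fee * term_years + installation


# Figures from Table 1, in pounds sterling.
print(f"System A2: £{naive_cost_of_solution(3_000_000, 5, 1_000_000):,}")  # £16,000,000
print(f"System B:  £{naive_cost_of_solution(100_000, 5, 20_000_000):,}")   # £20,500,000
```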

This is woefully wrong.

Let us take Table 1, add in the exit costs and include System A1. See Table 2.

Table 2 - Costs of System

| Name | License / Maintenance / Support | Length of Term | Installation Costs | Exit / Migration Cost |
|---|---|---|---|---|
| System A1 | Unknown | Unknown | Unknown | Unknown |
| System A2 | £3 million p.a. | 5 years | £800,000 | £200,000 |
| System B | £100,000 p.a. | 5 years | £300,000 | £19.7 million |

In the above, we have no data for the original System A1, and the majority of the costs involved in installing System B came from converting other systems which had previously worked with System A1. I've put these into the Exit / Migration Cost column. For System A2, the cost of converting other systems is lower due to the similarities between System A1 and A2.

Now, the first thing to note is that the majority of the costs involved in System B have nothing to do with System B. Instead they're costs of moving from another solution. System B itself, because it uses open standards, is relatively easy to move to and from. What we're in effect doing is transferring a liability created by one set of systems (i.e. A1 / A2) to another (B), which makes the latter more unattractive. It's a bit like someone saying that because wind power is replacing nuclear, wind power should absorb the costs of nuclear decommissioning. Only in the world of IT does this accounting sleight of hand seem to work.

What should happen is exit costs should be applied to the originating system. Now, if we adjust this taking into consideration the cost of transferring an open standards system B to an equivalent and how use of any system will create continued and increasing exit costs then we get to something like table 3.

Table 3 - Cost of System

| Name | License / Maintenance / Support | Length of Term | Installation Costs | Exit Cost (upgrade) | Exit Cost (migration) | Cost (solution) |
|---|---|---|---|---|---|---|
| System A1 | Unknown | Unknown | Unknown | Unknown | £19.7 million | Unknown |
| System A2 | £3 million p.a. | 5 years | £800,000 | £200,000 | > £19.7 million | > £35.7 million |
| System B | £100,000 p.a. | 5 years | £300,000 | £100,000 | > £100,000 | > £1 million |

In the above, the cost of upgrading from A1 to A2 incurs the exit cost of upgrade, the license / maintenance and support fees and the installation costs. The long term liability (exit cost) to another system is continued and in effect will only increase.

Migrating from system A1 to B will incur low costs of license / maintenance and support but also a longer term lower liability of exit cost since system B uses open standards. We can estimate a cost of upgrade from one open standards based solution to another and I've added a figure for this.

The total cost of solution B is vastly lower than upgrading to system A2. The only reason why it didn't appear so in the first instance is we transferred an originally unaccounted liability associated with exit from system A1 and discounted it from the upgrade path of A1 to A2 by adding it to the migration path of A1 to B. The only thing that will happen in this circumstance is you will become further locked into the vendor of system A1 / A2 and your future liability will just increase.
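Charging exit costs to the originating system is also simple arithmetic. The Python sketch below is illustrative only: the class and field names are hypothetical, and the ">" figures from Table 3 are treated as exact lower bounds. It computes each option's cost of solution with its own exit liability charged to it, and then shows how moving System A1's £19.7 million exit liability onto System B reproduces the misleading £20.5 million from Table 1:

```python
from dataclasses import dataclass


@dataclass
class SystemOption:
    """One row of Table 3. All amounts are in pounds sterling."""
    name: str
    annual_fee: int       # license / maintenance / support per year
    term_years: int
    installation: int
    exit_upgrade: int     # cost of the upgrade step itself
    exit_migration: int   # lower bound on the future liability of leaving this system

    def cost_of_solution(self) -> int:
        """Lower bound on total cost, with this system's own exit liability charged to it."""
        return (self.annual_fee * self.term_years + self.installation
                + self.exit_upgrade + self.exit_migration)


# Figures from Table 3; the migration exit costs are the ">" lower bounds.
a2 = SystemOption("System A2", 3_000_000, 5, 800_000, 200_000, 19_700_000)
b = SystemOption("System B", 100_000, 5, 300_000, 100_000, 100_000)

# The misattribution behind Table 1: System A1's exit liability is charged
# to System B instead of to the system that created it.
A1_EXIT_LIABILITY = 19_700_000
misattributed_b = b.annual_fee * b.term_years + b.installation + A1_EXIT_LIABILITY

for option in (a2, b):
    print(f"{option.name}: > £{option.cost_of_solution():,}")
print(f"System B with A1's exit cost misapplied: £{misattributed_b:,}")
```

Running it prints lower bounds of £35,700,000 for System A2 and £1,000,000 for System B, and £20,500,000 for System B once A1's exit cost is misapplied to it.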

By forcing such exit costs to be calculated and added to the originating project, you also force vendors to attempt to reduce your exit costs by including more open data and open standards efforts. If you don't do this and allow exit costs to be misapplied then you only create incentives for vendors to lock you in further.

Yes, in cash terms implementing System B will be more expensive, but that's only because those who installed System A1 didn't account for the exit costs. We learned these lessons a long time ago.

If you're still adding liabilities from exit costs to the wrong project (usually disguised as costs of migration / transition to) then :-

1) Please, never, ever run a piece of critical national infrastructure.

2) Please go find someone to help run your IT for you. It'll save you money long term if you consider exit costs in purchasing.

Every single IT project creates a future liability, a cost of exit. When someone tells you that you can't move to a more open system (i.e. open data formats etc) because the cost of migration is too high then this normally means someone hasn't been doing their job and accounting for, managing and mitigating against this future liability.

Friday, November 22, 2013

Good things come in threes ...

First, an excellent post by +Bernard Golden on AWS vs CSP which reminds me of the whole "Innovation" debate. Key to the process of commoditisation is the formation of standard interfaces providing good enough commodity components and then innovation moving above (new things that are built) and below the interface (operational efficiency).

Second, another excellent post but this time by +Geoff Arnold on "Whither Openstack?"

Lastly, a great quote on Enterprise Cloud by Jack Clark "It's a marketing term. No one has ever convinced me otherwise."

So, I couldn't resist. From an old presentation in 2010 (without the typo).

The Demand.

The Answer

The problem is that what people want is the benefits of efficiency and agility created through volume operations and commodity components, but they don't want to incur the cost associated with co-evolution of practice (i.e. N+1 to design for failure, scale up to scale out etc). So they want the new but provided like the old, hence avoiding any need to re-architect the legacy.

It doesn't work like that, which is why the term "Enterprise Cloud" can be so misleading. A better choice of words is still virtual data centre (VDC).

Wednesday, November 20, 2013

Without a map you have no strategy

To paraphrase a recent conversation.

Vendor : we're going to attack this space in Q1 next year.

Me: Excellent, that's when you're going to do something but what are you going to do?

Vendor: we're going to launch a cloud based service

Me: Fine, that's what you're going to do and I'll ignore the vagueness here but how are you going to do it?

Vendor: we're hiring talented people to build our system which we might build in an agile way using open source.

Me: Ok, that's sort of how and I won't go into the details because it all sounds a bit suspect, I'll just ask you why?

Vendor: because our competitors are launching cloud services and we need to be in the market.

Me: that's not really a good example of why. What I'm looking for is why here over there?

Vendor: because everyone else is launching ...

Me: ... no, you're just repeating yourself. Why attack this space over another? There are multiple "where" that you can attack, why this one over another?

Vendor: not sure I follow you?

Me: do you have a map of the landscape identifying user needs and how things are changing?

Vendor: what's a map?

Me: Ok, we can assume that's a no then and this is probably the root of your problems. When writing a strategy, the first thing you have to do is map out the landscape for the area that you're looking at. To do this always start with user needs. Second, once you have a map you can determine where you might attack. Third, once you know where, you can determine why here over there. Then you determine the how, what and when. Without a map you don't have much of a strategy, just a tyranny of how, what and when, and basically you're playing chess in the dark.

Vendor: we do have a strategy, we're launching our new cloud service next quarter.

Me: so is everyone else, and not one of you seems to know why or has determined the needs.

Vendor: we do know our users, it's written in the strategy.

Me: I could tell you a joke about a general bombarding a hill because "67% of successful generals bombard hills" but you'd probably miss the point. You don't have much of a strategy since you're just doing this because others are and you have no way of better playing the game than this.

... and so on.

Can I please reiterate to people the importance of :-

- Map your environment (starting with user needs)

- Determine where you can attack (the options)

- Determine why you would attack one space over another (i.e. the games you can play, the advantages / disadvantages, the opponents, how you can exploit inertia or constraints, possibilities for building ecosystems etc)

- Determine the how (i.e. the method - using an open approach, co-creation with others, alliances)

- Determine the what (the details) and finally the when.

If you don't first map your landscape, you are, and only ever will be, playing chess in the dark.
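The first two steps, mapping from user needs and then identifying where you could attack, can be sketched in Python. This is a minimal sketch under loud assumptions: the landscape and its components are hypothetical, the evolution labels assume the genesis, custom built, product and commodity sequence, and the "options" filter is a crude stand-in for judgement rather than a method. Deciding why here over there still needs the gameplay described above:

```python
from collections import deque

# Hypothetical landscape, for illustration only: component -> (evolution stage, needs).
LANDSCAPE = {
    "photo sharing service": ("product", ["image processing", "platform"]),
    "image processing": ("custom built", ["platform"]),
    "platform": ("custom built", ["compute", "storage"]),
    "compute": ("commodity", []),
    "storage": ("commodity", []),
}


def value_chain(meets_user_need: str, landscape: dict) -> list:
    """Map the landscape starting from the component that meets the user need.

    Walks dependencies breadth-first and returns (component, visibility, stage),
    where visibility is the number of steps away from the user need.
    """
    visibility = {meets_user_need: 0}
    queue = deque([meets_user_need])
    while queue:
        component = queue.popleft()
        for dependency in landscape[component][1]:
            if dependency not in visibility:
                visibility[dependency] = visibility[component] + 1
                queue.append(dependency)
    return [(c, v, landscape[c][0]) for c, v in visibility.items()]


chain = value_chain("photo sharing service", LANDSCAPE)
for component, depth, stage in chain:
    print(f"{'  ' * depth}{component} [{stage}]")

# Purely illustrative shortlist of "where": components not yet commoditised.
options = [c for c, _, stage in chain if stage != "commodity"]
```

Breadth-first order is used so that components closer to the user need (more visible) are listed first, which mirrors reading a map from top to bottom.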

Sunday, November 17, 2013

Mapping, Competitive Advantage and Kodak Moments.

Throughout this blog and my talks, I've covered the question of mapping (understanding the board), the rules of the game (economic change) and given examples of game play. Mapping is critical to understanding the environment you exist within and "where" you might attack, "why" being a relative statement of why here over there.

However, I want to put up a warning because I was recently asked whether mapping itself can be a source of competitive advantage or differential.

Tl;dr : Mapping can rarely be a source of competitive advantage; it's more a survival tool.

Back in 2005, myself, James Duncan and Greg McCarroll (who recently passed away) mapped out Fotango, the company I was CEO for, and used the map to determine where we should attack in the future. From this, the project which became known as Zimki - a platform as a service play - was born along with all the gameplay around it.

The map is provided in figure 1. This is not the original but a simplified version which I've tidied up adding more recent terms like "uncharted" and "industrialised" to describe the different domains. The map describes the value chain, the evolutionary state and inherent in this was :-

1) How activities evolve and their characteristics change, leading to agile being useful on one side of the map and six sigma on the other.

2) Inertia to change that organisations have.

3) Componentisation effects leading to higher order systems.

4) Use of ecosystems (in particular models like innovate-leverage-commoditise)

5) Use of open as a tactical weapon to manipulate the map

Figure 1 - The Fotango Map.

The single most important thing you can take away from the map however is the date - 2005. There are people out there who have almost a decade of experience in playing these games within companies. James fortunately works for UK Gov these days but there are many others who have long and detailed experience of this.

Which brings me back to the question of competitive advantage. Certainly mapping can provide an advantage when you're playing against a "blind chess" player (a category of companies we define as 'chancers'), which admittedly many companies do an excellent job of emulating. But there are some very dangerous foes out there. If you think mapping alone is going to give you an advantage over some of the big players in the cloud space then think again. Some of these companies are run by very accomplished chess players.

In these cases, I'd be using mapping to find another route by which you can build a company and avoid any head on confrontation - you will tend to lose, they will tend to outplay you, they have the experience and they've built highly defensible positions and strong ecosystems. Knowing when to withdraw from a battle to conserve resources in order to fight on another front is one of the hardest things I know for a CEO to do. No-one likes to admit defeat, no CEO likes to accept they've been outplayed and many companies are lost pursuing conflict driven by ego.

More than inertia, poor situational awareness and poor chess play, it is the hubris involved and the continued pursuit of the un-winnable battle that I like to call the "Kodak Moment". Whilst it is common for people to state "but Culture eats strategy for breakfast", just remember that although culture is always important, there are times in the economic cycle when strategy is more important.

We are in one of those times within the IT field (including every industry where IT is a main component of its value chain). There is no hiding place here. Company failure will be blamed on lack of engineering talent, poor culture or inertia, but these will not be the cause. Companies will principally fail because of poor chess play by 'peace' time CEOs.

Tuesday, November 12, 2013

Somewhat bored

I find many of the moves made in the technology industry frustrating, especially when there are obvious plays. Back in 2008, I told Dell / IBM / HP about the threat of AWS, its use of a learning model similar to ILC (see below) and how it could be attacked.

Back then AWS had a constraint in building data centres. By creating an AWS clone and forcing a price war, you could increase demand (compute infrastructure being elastic) beyond Amazon's ability to supply and so naturally fragment the market. Did they do this? No, they fiddled whilst Rome burned.
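A toy calculation makes the supply-constraint argument concrete. It assumes a constant-elasticity demand curve with elasticity above 1 (the 'elastic' case), and every number in it (starting demand, prices, elasticity, the incumbent's capacity) is made up for illustration rather than taken from AWS.

```python
"""Toy model: a price war pushing elastic demand past an incumbent's capacity.

All figures are hypothetical; the constant-elasticity demand curve is an assumption.
"""

BASE_DEMAND = 100.0  # hypothetical units of compute demanded at BASE_PRICE
BASE_PRICE = 1.0     # hypothetical starting price (arbitrary units)
CAPACITY = 150.0     # hypothetical maximum the incumbent can supply
ELASTICITY = 1.5     # assumed: > 1, so demand grows faster than price falls


def demand(price: float) -> float:
    """Constant-elasticity demand: Q = Q0 * (P / P0) ** -e."""
    return BASE_DEMAND * (price / BASE_PRICE) ** -ELASTICITY


# Walk the price down and flag the point where demand outruns supply.
for price in (1.0, 0.8, 0.6, 0.5):
    q = demand(price)
    status = "exceeds capacity" if q > CAPACITY else "within capacity"
    print(f"price={price:.1f} demand={q:.1f} ({status})")
```

With these assumed figures, cutting the price to 0.6 lifts demand to roughly 215 units, past the 150 the incumbent can supply; that unmet demand is what rival clones would pick up, fragmenting the market.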

Ok, I'm not expecting any of these companies to listen but having mapped it out, some advice - whether welcome or not.

Versus Android / Samsung

Android is becoming market dominant and Samsung is the biggest player in this space. Samsung is also heavily supporting Tizen, but this really isn't a challenger to Android; it's simply about Samsung's buyer / supplier relationship and power games. Google is hardly going to pay a great deal of attention, and Samsung is vulnerable to product versus product substitution.

Android's weakness is Google, which, whilst ok at platform / ecosystem plays, is hardly the best player. The ecosystem around Android is mainly centred on the App Store but, despite all the hype about "Apps will rule the world", these types of ecosystem are incredibly poor for learning. This matters.

Take for example Amazon EC2. This is a component ecosystem, i.e. Amazon provides components as utility services for others to use. What those companies build varies from applications to other component services. This provides a learning opportunity for Amazon: as new component services diffuse, Amazon can leverage the ecosystem by examining consumption information (i.e. core consumption data on underlying APIs) to identify successful new components which it can then commoditise itself. This is the heart of ILC: (get others to) Innovate, Leverage (the ecosystem) and Commoditise (to components). App stores don't have this because the app tends to be the end state, i.e. apps are rarely used as components. They also tend to be domain specific, i.e. fixed to a device. App stores are hence very weak ecosystems compared to component ecosystems.
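The 'Leverage' step can be sketched as a filter over consumption data: look for components built by others that are both widely consumed and growing fast, since those are the candidates to commoditise. This is a minimal illustration under stated assumptions; the component names, call counts and both thresholds are hypothetical, and it is not a description of how Amazon actually analyses its data.

```python
"""Sketch of ILC's 'Leverage' step: spot diffusing components from consumption data.

Component names, usage figures and thresholds are all hypothetical.
"""
from dataclasses import dataclass


@dataclass
class ComponentUsage:
    name: str                 # hypothetical component built by an ecosystem partner
    monthly_calls: list[int]  # calls observed on the underlying utility APIs


def growth_rate(calls: list[int]) -> float:
    """Average month-on-month growth across the observed window."""
    rates = [(b - a) / a for a, b in zip(calls, calls[1:]) if a > 0]
    return sum(rates) / len(rates) if rates else 0.0


def commoditisation_candidates(usage: list[ComponentUsage],
                               min_growth: float = 0.2,
                               min_volume: int = 10_000) -> list[str]:
    """Return components that are already widely consumed AND still diffusing
    quickly, i.e. candidates to Commoditise into new utility components."""
    picks = []
    for u in usage:
        widely_used = bool(u.monthly_calls) and u.monthly_calls[-1] >= min_volume
        if widely_used and growth_rate(u.monthly_calls) >= min_growth:
            picks.append(u.name)
    return picks


ecosystem = [
    ComponentUsage("image-resizer", [2_000, 4_000, 8_000, 16_000]),
    ComponentUsage("niche-report-tool", [500, 520, 540, 560]),
    ComponentUsage("message-queue", [9_000, 12_000, 15_000, 20_000]),
]

print(commoditisation_candidates(ecosystem))  # ['image-resizer', 'message-queue']
```

An app store sees the app as the end state, so there is no equivalent stream of downstream component consumption to run a filter like this over.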

So you have one company that is vulnerable to product vs product substitution and another with a weak ecosystem play. This can be attacked.

I won't go through the details of how but I'll shoot to the end.

Microsoft / Nokia, Lenovo, HTC, China Telecom, Ericsson (after buying BlackBerry) and others in Canonical's Carrier Advisory Group should make a play around Ubuntu Touch. Forget the past: Ubuntu has a large development ecosystem, it's positioned strongly, there's ample opportunity for intelligent software agents and this route is viable, even for Microsoft.

Versus AWS

Forget the IaaS play or the transitional private cloud play - you've lost. Lick your wounds and leave the whole infrastructure battle to a later substitution play. It's far better to accept losing this one battle, conserve your resources and try to win the overall war. You're up against a well established player - Amazon - who knows how to play the game and use ecosystems. You need to change this to your advantage.

Again, I'll shoot to the end, ignoring the details.

IBM / HP / Dell et al need to make a massive play around Cloud Foundry, and by that I mean building large public PaaS offerings on top of AWS. You need to drive AWS to an invisible component, grab the ecosystem through a common market and then, over time, look to substitute AWS at the infrastructure level and hence rebalance the supplier / buyer relationship. You can exploit the fact that you're building on AWS to prevent AWS playing games against you whilst you build the substitution play.

On Ubuntu

In both examples, Ubuntu is prominent. Before you ask "why not Red Hat?", well, it's poorly positioned on both fronts: great for the past, weak for the future. As for Ubuntu, the above companies should make the plays and create a JV to buy out Ubuntu.

Canonical is the wedge to achieve both plays, and you don't have much time to do this. Google is building components, and AWS could make a strong platform play by buying Salesforce, though fortunately Amazon needs to invest so much to keep up with AWS growth that it may be unable to do this. Hence, fast action using Ubuntu as the wedge to create a market which favours you should be the order of the day.

I could go through the game play in more detail, some of the nuances, how you build competing ecosystems through co-opetition etc, but that's not the point. My point is that AWS and Android will only win if others let them, and there is time for this game to be changed if you play smart and work together.

Saturday, November 09, 2013

Oh not again - should we be an agile or six sigma shop?

I was recently asked this question and, to be honest, I was flabbergasted. Understanding when to use agile and when to use six sigma (or some other highly structured method) is a well-known problem that is almost a decade old.

However, I was asked to write a post on this and so, with much gnashing of teeth, I give you the stuff which you should already know. If you don't, then please stop trying to write systems and go and do some basic learning instead.

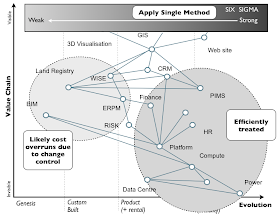

First, every system of any reasonable scale consists of multiple components. Those components are all evolving (due to the effects of competition) and you can (and should) map out the components of any system before embarking on trying to build it. Figure 1 shows a basic map from a heavy engineering project with a large IT component.

Now, all the components are evolving from left to right and as they do so their characteristics change: they move from an uncharted space (the novel, the chaotic, the unpredictable, the uncertain, the potential differential) to the more industrialised (the common, the appearance of linear order, the predictable, the certain, the cost of doing business). See figure 2.

Figure 2 - Basic Characteristics

Agile (e.g. XP, Scrum) and structured (e.g. Six Sigma, Prince 2) methods are suited to different problem domains. Agile, through the use of test driven development, attempts to reduce the cost of change throughout a project's life to a baseline, but it does so at a slight cost compared to the cost of change in the requirements / design phase of a structured method. An approximation of this is given in figure 3.

Figure 3 - Cost of Change by Method

Hence for those uncharted activities where there is rapid change (due to their properties, i.e. they're uncertain and we're discovering them), Agile is the most appropriate method. Whilst it might have a higher cost of change than the requirements / design phase of a structured method, change will occur throughout the project's life, and Agile keeps the cost of each of those changes near its baseline rather than letting it climb.
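The trade-off can be sketched with a toy cost-of-change model. The curve shapes and constants below are assumptions chosen only to mimic the rough shape the post describes (structured: cheap up front, steep afterwards; Agile: a flat baseline slightly higher than the structured design phase); they are not measured data.

```python
"""Toy cost-of-change model for a structured method versus Agile.

Curve shapes and constants are assumptions, not measurements.
"""
from typing import Callable, Iterable

DESIGN_PHASE_END = 0.2  # assumed: first 20% of the project is requirements / design


def structured_cost(phase: float) -> float:
    """Cost of one change under a structured method; phase runs from 0 to 1.
    Cheap during requirements / design, then rising steeply afterwards."""
    if phase < DESIGN_PHASE_END:
        return 1.0
    return 1.0 + 20.0 * (phase - DESIGN_PHASE_END)


def agile_cost(phase: float) -> float:
    """Agile holds the cost of change at a flat baseline (phase is ignored),
    slightly above the structured method's design-phase cost."""
    return 1.5


def total_change_cost(cost_fn: Callable[[float], float],
                      change_phases: Iterable[float]) -> float:
    """Sum the cost of every change, given the project phase at which each occurs."""
    return sum(cost_fn(p) for p in change_phases)


# Uncharted activity: changes keep arriving throughout the project's life.
uncharted_changes = [i / 10 for i in range(10)]  # phases 0.0, 0.1, ..., 0.9
# Industrialised activity: the little change there is gets settled up front.
industrialised_changes = [0.0, 0.1]

for label, changes in (("uncharted", uncharted_changes),
                       ("industrialised", industrialised_changes)):
    s = total_change_cost(structured_cost, changes)
    a = total_change_cost(agile_cost, changes)
    print(f"{label}: structured={s:.1f} agile={a:.1f}")
```

Under these assumptions Agile is far cheaper for the uncharted activity (15 versus about 66), whilst the structured method wins for the industrialised one (2 versus 3), which is the crossover the argument relies on.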

Alternatively, those more industrialised acts that are defined, well understood and highly repeatable are more effectively managed by a highly structured method, because change shouldn't exist and, where it is necessary, it should be eliminated in the requirements / design phase. This leads to a situation where different methods (e.g. Agile, Six Sigma) are suitable for different evolutionary stages; see figure 4.

Figure 4 - Method by Evolutionary Stage

So when examining your map, it should be fairly obvious that you need multiple methods, with Agile (e.g. XP) and in-house development at one extreme and Six Sigma, with the potential for outsourcing, at the other. For those transitional activities (i.e. products), you should be using products and minimising configuration / waste / unnecessary change where possible, which requires another set of methods. Hence for our heavy engineering project we could use the following; see figure 5.
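Choosing methods from a map amounts to a lookup from each component's evolutionary stage to a method and a sourcing choice. The sketch below follows the two extremes the post names (Agile in-house, Six Sigma as an outsourcing candidate); the post doesn't name the methods for transitional activities, so that entry is only a descriptive placeholder, and the component names are hypothetical rather than those in figure 5.

```python
"""Sketch: pick a method for each component on a map from its evolutionary stage.

Stage names follow the post; the components and the transitional label are hypothetical.
"""
from enum import Enum


class Stage(Enum):
    UNCHARTED = "uncharted"            # novel, uncertain, potential differential
    TRANSITIONAL = "transitional"      # products
    INDUSTRIALISED = "industrialised"  # common, predictable, cost of doing business


# (method, sourcing) per stage, following the two extremes described in the post.
METHOD_BY_STAGE = {
    Stage.UNCHARTED: ("Agile (e.g. XP)", "in-house development"),
    Stage.TRANSITIONAL: ("product methods: minimise configuration / waste", "use existing products"),
    Stage.INDUSTRIALISED: ("Six Sigma", "candidate for outsourcing"),
}


def plan_methods(component_stages: dict[str, Stage]) -> dict[str, tuple[str, str]]:
    """Map every component to a (method, sourcing) pair. Re-run this as
    components evolve, since the right method changes with the stage."""
    return {name: METHOD_BY_STAGE[stage] for name, stage in component_stages.items()}


# Hypothetical components from a heavy engineering map.
example_map = {
    "novel control software": Stage.UNCHARTED,
    "design tooling": Stage.TRANSITIONAL,
    "compute": Stage.INDUSTRIALISED,
}

for component, (method, sourcing) in plan_methods(example_map).items():
    print(f"{component}: {method} [{sourcing}]")
```

Because the lookup is keyed on stage rather than on the component, moving a component to a new stage on the map automatically changes the method it gets.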

The anomaly in the above is 'web site', which is a commodity but uses Agile methods. This is because maps are imperfect and 'web site' is in fact a value chain of multiple activities, some of which are more commodity whilst others are novel and new. This needs to be broken down, and in the case of the engineering project it was. One final note: all the activities are evolving due to competition, and hence the method you use for one activity will change as it evolves.

Misapplication of methods will have costly effects. For example, if you outsource the entire value chain described by the map to a third party, then you're likely to settle on a structured method because you'll want 'guarantees' of what is being delivered. The net effect of applying a single structured method (such as Six Sigma) across the entire value chain is that whilst some activities will be treated effectively, you'll also be applying structured methods to activities that are rapidly changing. The end result will always be cost overruns due to the change control costs associated with structured methods, i.e. you're applying a method designed to reduce deviation to an activity that is constantly deviating; see figure 6.
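A toy comparison shows where the overrun comes from. Every number here is hypothetical and only the direction of the result matters; for brevity the sketch leaves out transitional activities and models just two cases: one structured method across the whole value chain, and methods matched to each activity's stage.

```python
"""Toy comparison: one structured method everywhere versus methods matched to stage.

All costs, change counts and activity names are hypothetical.
"""
from dataclasses import dataclass

CHANGE_CONTROL_COST = 10.0  # assumed cost of pushing one late change through change control
AGILE_OVERHEAD = 1.2        # assumed Agile premium on base cost (the "slight cost")
AGILE_CHANGE_COST = 1.0     # assumed cost of one late change under Agile


@dataclass
class Activity:
    name: str
    base_cost: float
    late_changes: int  # changes arriving after requirements / design
    uncharted: bool    # True if novel and still being discovered


def structured(a: Activity) -> float:
    return a.base_cost + a.late_changes * CHANGE_CONTROL_COST


def agile(a: Activity) -> float:
    return a.base_cost * AGILE_OVERHEAD + a.late_changes * AGILE_CHANGE_COST


def single_method_cost(activities: list[Activity]) -> float:
    """Outsource-everything case: one structured method across the value chain."""
    return sum(structured(a) for a in activities)


def matched_method_cost(activities: list[Activity]) -> float:
    """Agile for uncharted activities, structured for industrialised ones."""
    return sum(agile(a) if a.uncharted else structured(a) for a in activities)


value_chain = [
    Activity("novel component A", 100.0, 30, True),
    Activity("novel component B", 80.0, 20, True),
    Activity("compute", 50.0, 0, False),
    Activity("payroll", 40.0, 1, False),
]

print(f"single structured method: {single_method_cost(value_chain):.0f}")
print(f"methods matched to stage: {matched_method_cost(value_chain):.0f}")
```

With these assumed figures the single structured method costs 780 against 366 for matched methods, and almost all of the gap is change-control charges on the two uncharted activities.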

Figure 6 - Single Method

At Fotango we went all agile in 2001 and by 2003 had learned our lesson: multiple methods were needed. I first spoke about this need for multiple methods at a public conference in 2004 and have repeated it countless times. From about 2007 onwards, the use of multiple methods and the application of appropriate methods has become increasingly common. The above is, of course, a fairly basic interpretation.

It's 2013 now ... come on people, get with the program; we've had almost a decade of this agile versus six sigma nonsense! It's really only the very extreme laggards who describe themselves as either an 'Agile' or a 'Six Sigma' shop. There is no 'one size fits all', and you need to use methods where appropriate.

On a personal note

I'm extremely disappointed to be asked this 'Agile vs Six Sigma' question in 2013. The next person will get a boot up the backside from me. Either learn your techniques and how and when to apply them or find another job where you'll do less damage.